BigQuery - old

Setting up tables

Data in BigQuery typically resides in tables. When sharing data with Toplyne, the best practice is to create separate tables for event data and profile data partitioned on a time column.

Event data table

This table will capture a user's action; it will help answer the question, “what has a user done on your product, and when.”

Rows

Each row in this table represents an event triggered by a user.

Columns

USER_ID(Required): Key to identify which user has performed the eventACCOUNT_ID(required for account-level analytics): Key to identify which account the particular user belongs toEVENT_NAME(Required): Name of the eventTIMESTAMP(Required): Timestamp at which the event has occurredUPDATED_AT(Required): UTC timestamp when the row has been updated or added (not the event timestamp)EVENT_PROPERTIES(Optional): Event properties, typically shared as a JSON

with key/value pairs

Partition

Partition this table on TIMESTAMP column.

Sample Event Table

| USER_ID | ACCOUNT_ID | EVENT_NAME | TIMESTAMP | UPDATED_AT | EVENT_PROPERTIES |

|---|---|---|---|---|---|

| 1298 | 23 | Payment_Initiated | 2022-10-26 07:08:34 | 2023-01-09 17:08:34 | { "paymentid": 123, "amount" : 200, } |

| 1567 | 77 | Trial_Ended | 2022-11-01 20:01:14 | 2023-01-09 17:08:34 | { "accountid" : 77 } |

Profile data table

This table will capture any profile information a user or an account has; it will help answer the question, “who is this user or account?”.

Rows

Each row in this table is a unique entity.

Columns

USER_ID/ACCOUNT_ID(Required): Key to identify which entities' properties are listed in the row.USER_CREATION_TIME/ ACCOUNT_CREATION_TIME (Optional): To identify since when the user or account has been active.UPDATED_AT: UTC timestamp when the row has been updated or added (not the event timestamp)USER_PROPERTIES/ACCOUNT_PROPERTIES: Each subsequent column is a profile key; against it, you will have its value. For example, you may choose to include the email address, geographical location, account name, current billing plan they are on, etc.

Partition

Partition this table on UPDATED_AT column.

Sample User Table

| USER_ID | USER_CREATION_TIME | UPDATED_AT | Country | Current Plan | |

|---|---|---|---|---|---|

| 1296 | 2022-10-26 07:08:34 | 2022-11-26 07:08:34 | [email protected] | UK | Annual Plan |

| 2676 | 2022-11-01 20:01:14 | 2022-12-01 20:01:14 | _[email protected] | UK | Monthly Plan |

Sample Account Table

| ACCOUNT_ID | ACCOUNT_CREATION_TIME | UPDATED_AT | AccountName | Country | Current Plan |

|---|---|---|---|---|---|

| 23 | 2022-10-26 07:08:34 | 2022-11-26 07:08:34 | Queen | UK | Annual Plan |

| 56 | 2022-11-01 20:01:14 | 2022-11-26 07:08:34 | Beetles | UK | Monthly Plan |

Step-by-step guide to share

-

Login into your Google Cloud Platform account.

-

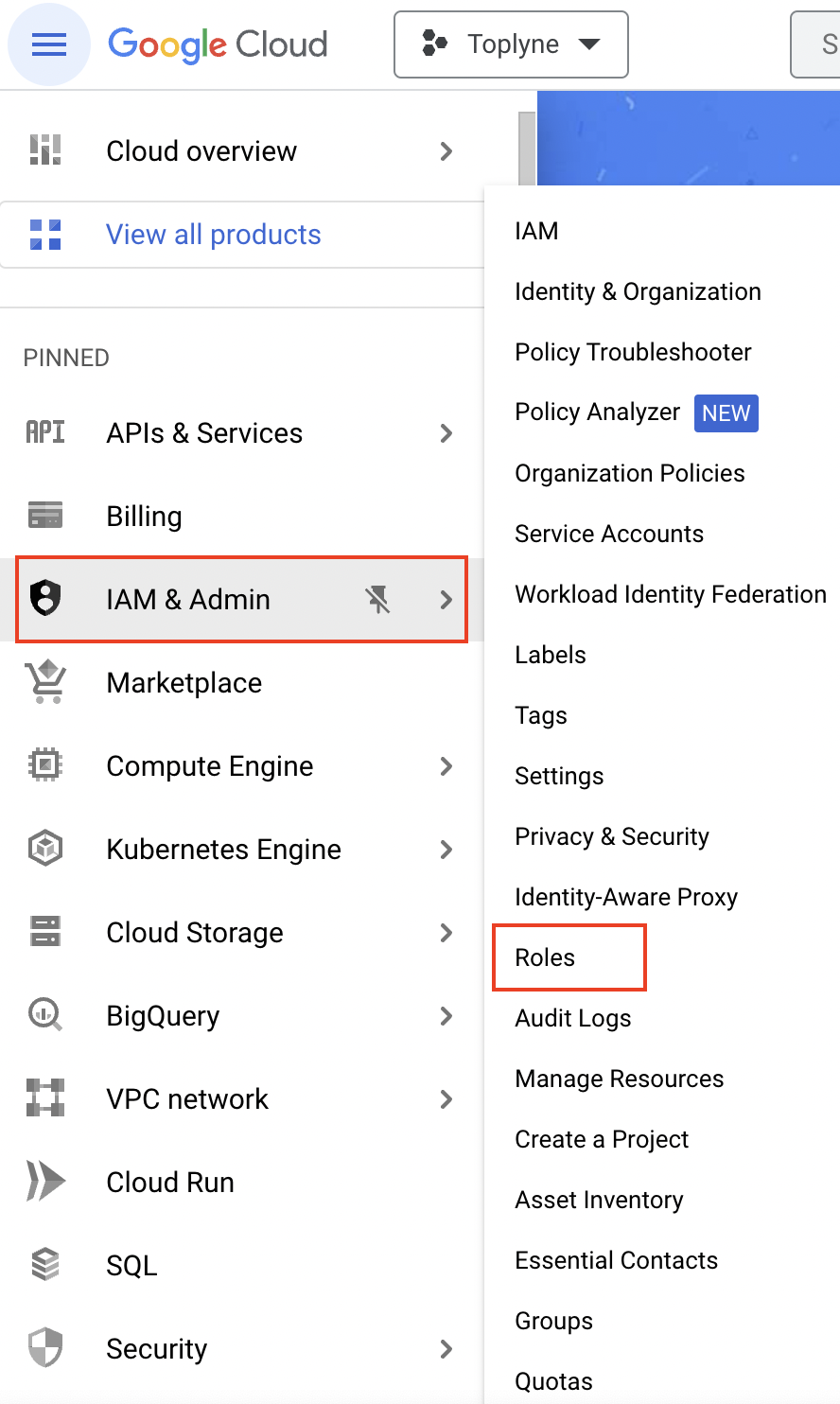

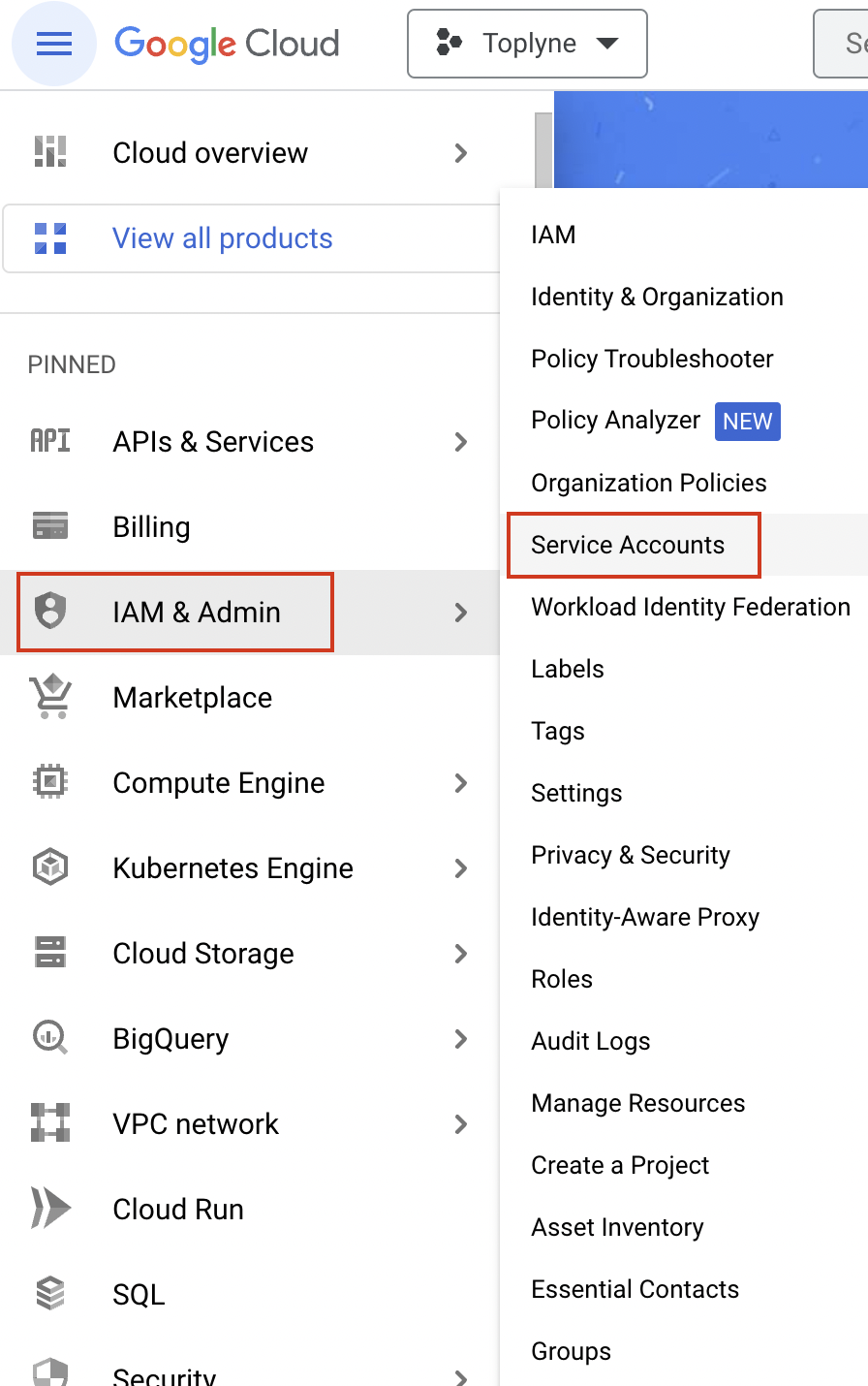

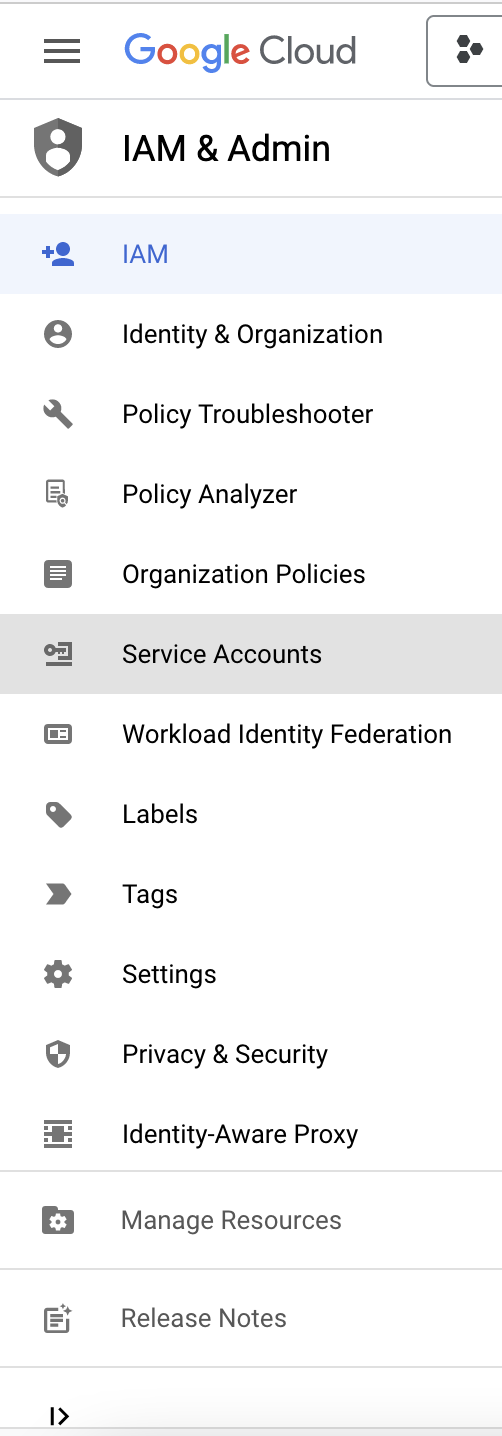

Go to IAM & Admin -> Click on Roles

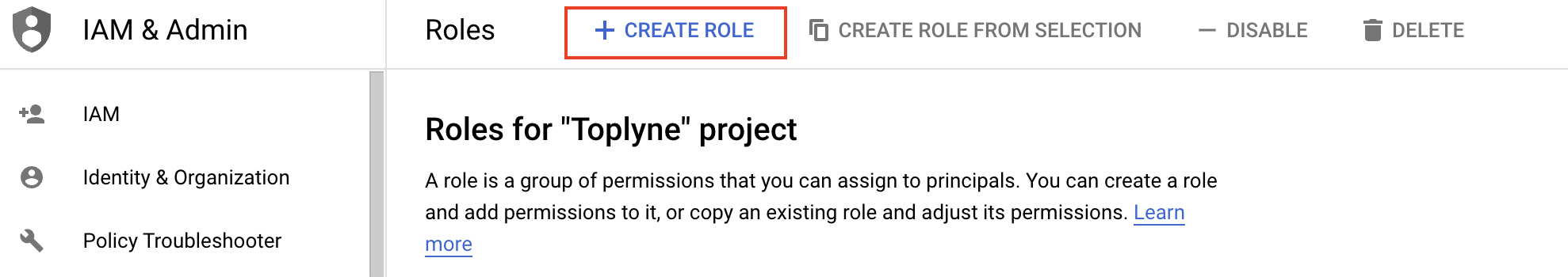

- Click on Create Role

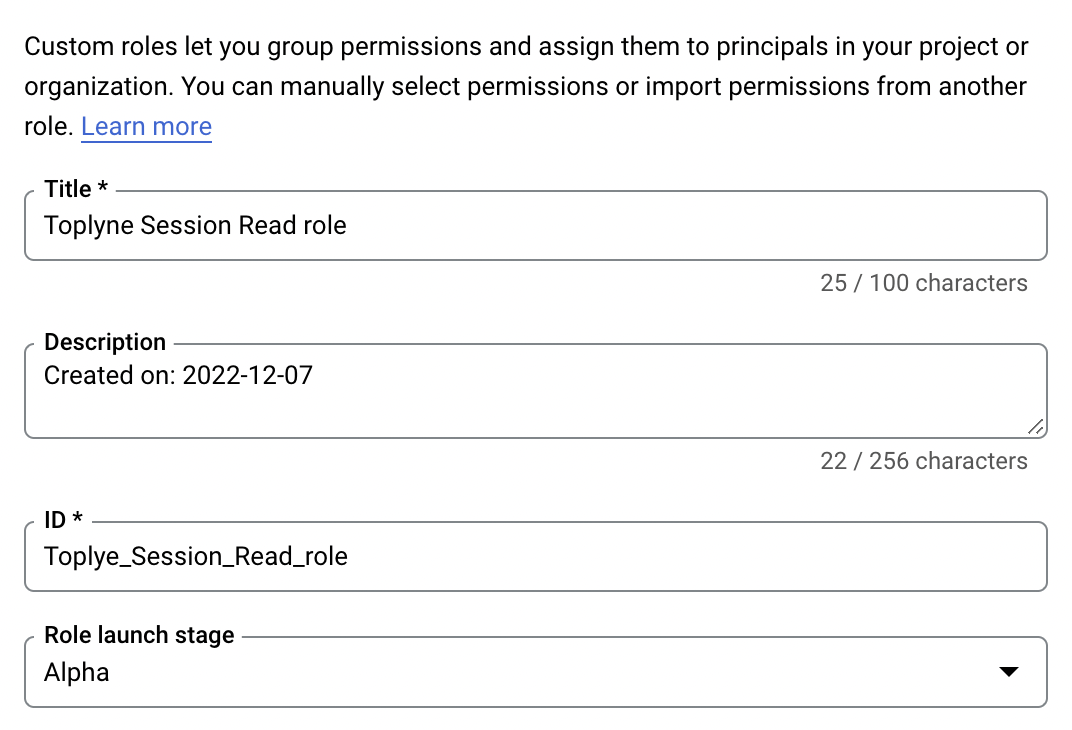

- Add Title As Toplyne Session Read role and ID as Toplye_Session_Read_role

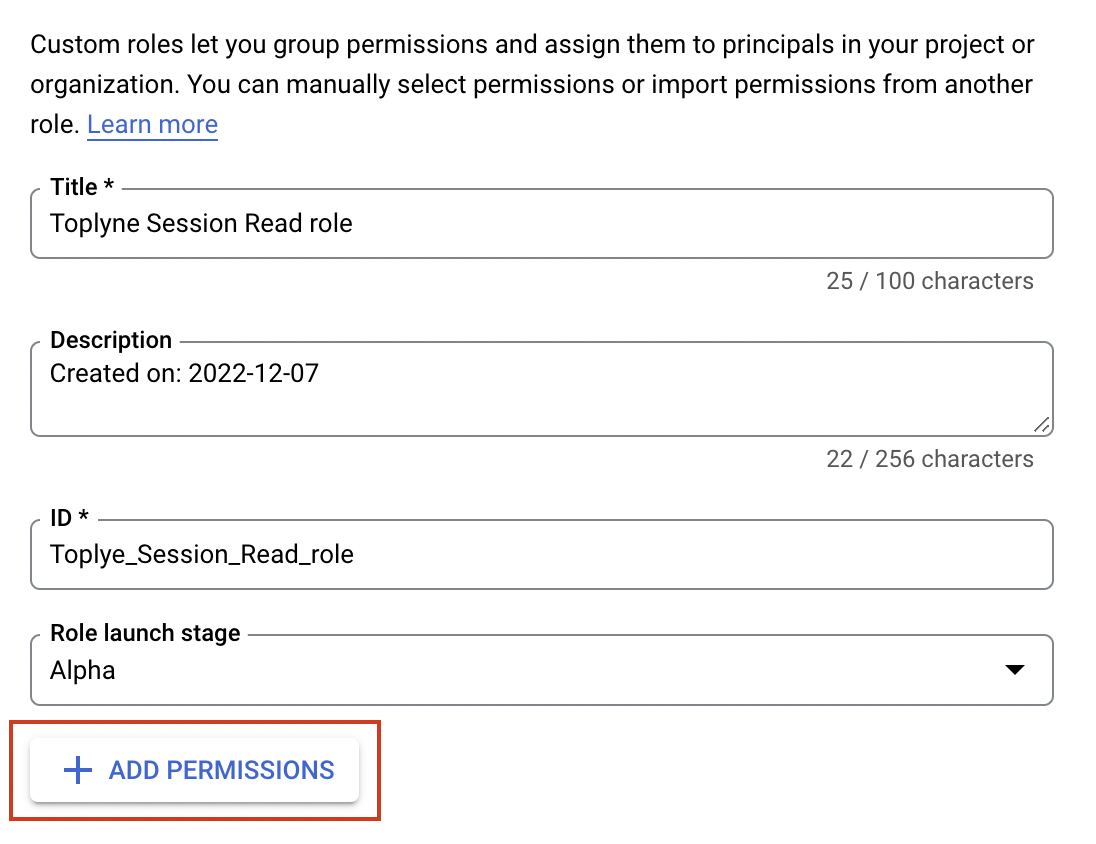

- Click on ADD PERMISSIONS

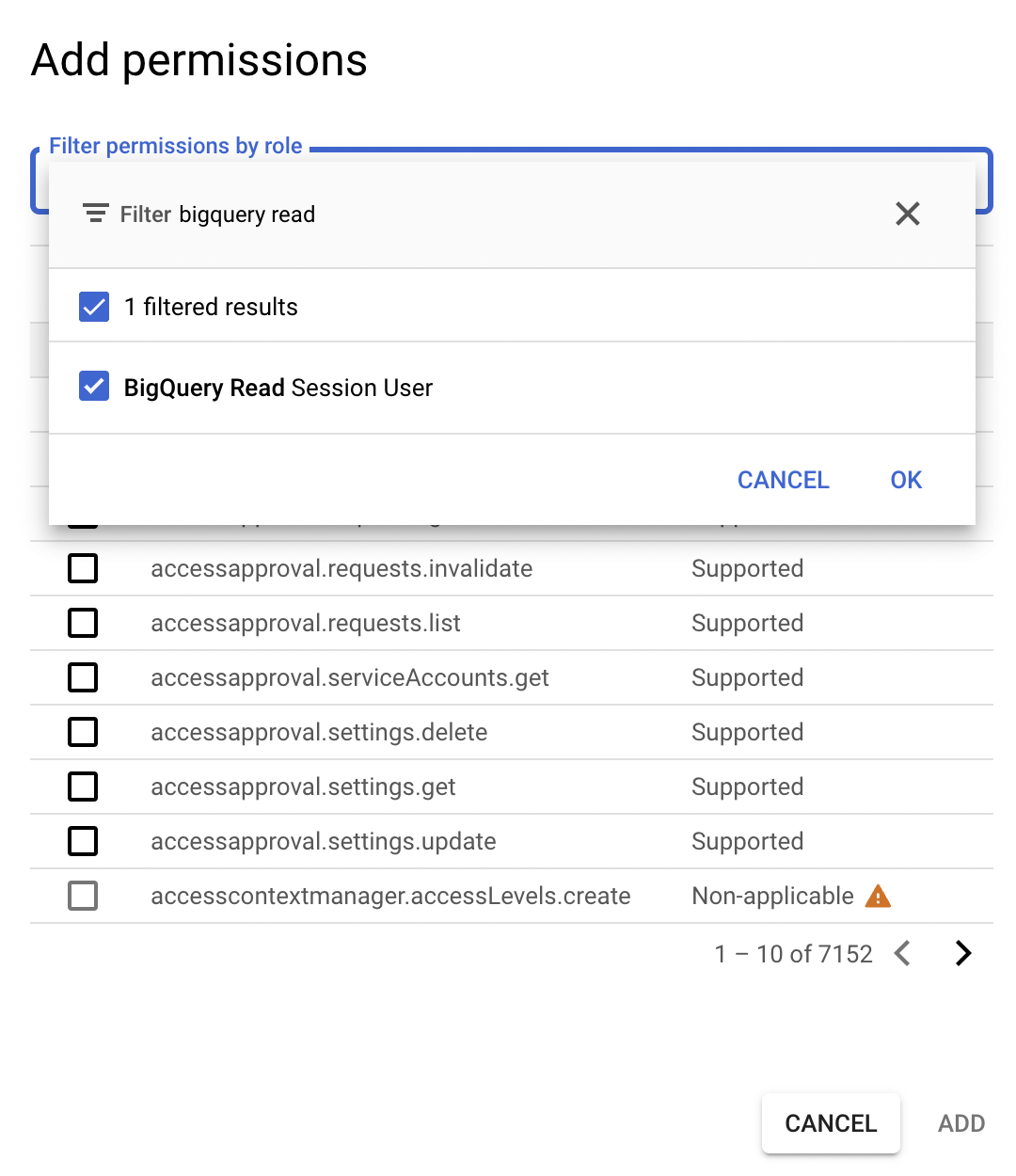

- Search for BigQuery Read Session User. Select the option and click Ok

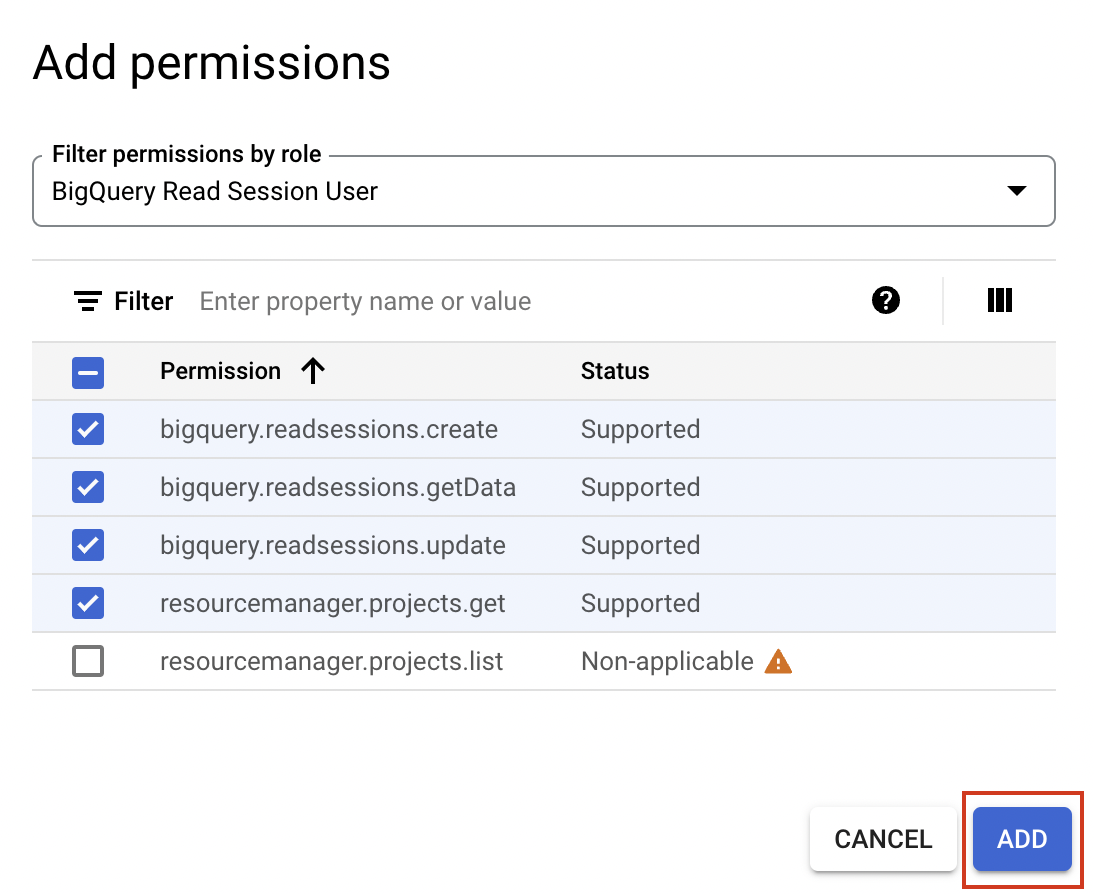

- Select all the supported roles and click ADD

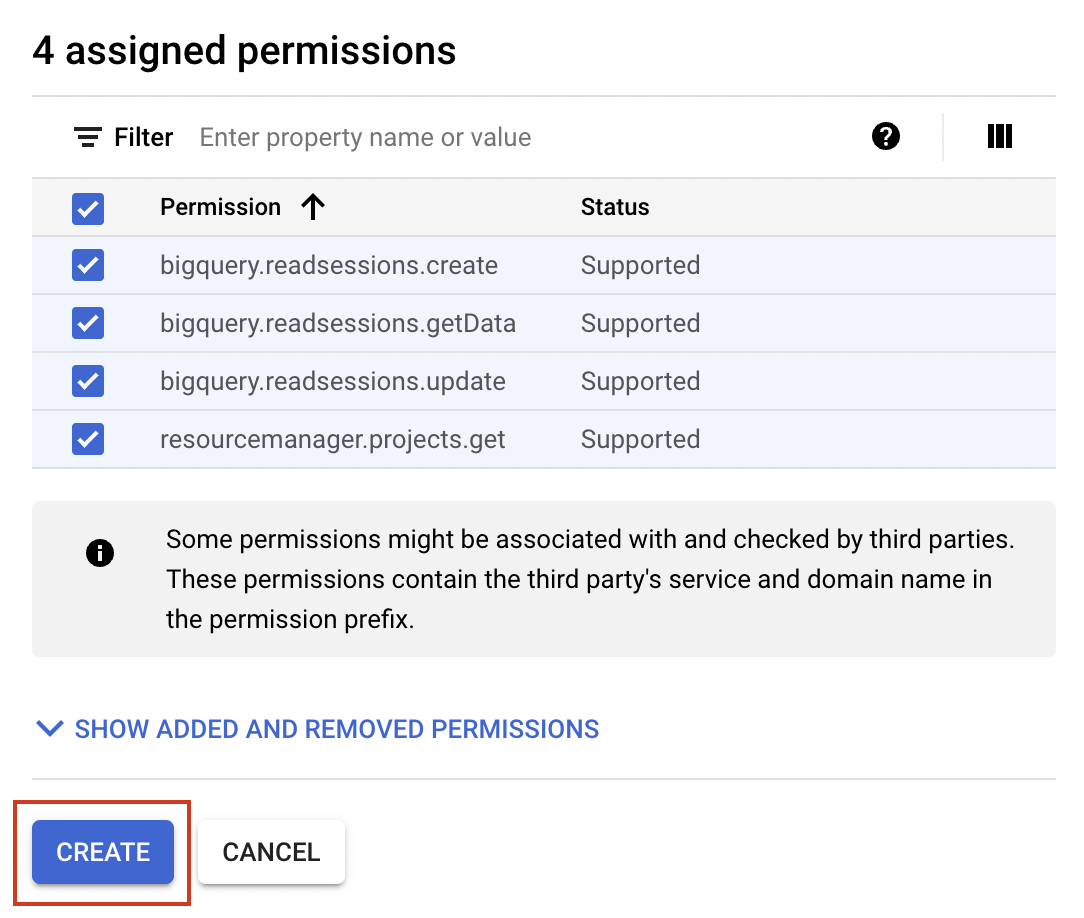

- Click on Create to create the role

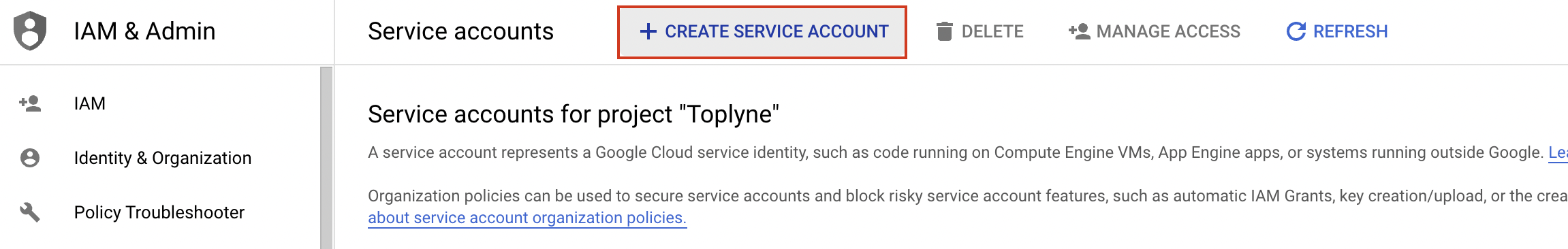

- Go To IAM & Admin -> Select Service Accounts

- Click on Create a Service Account

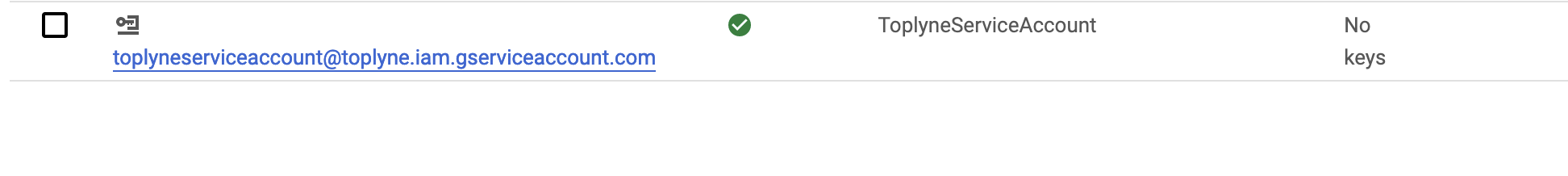

- Enter the Name ToplyneServiceAccount and Service account Id toplyneserviceaccount -> Click on Create and Continue

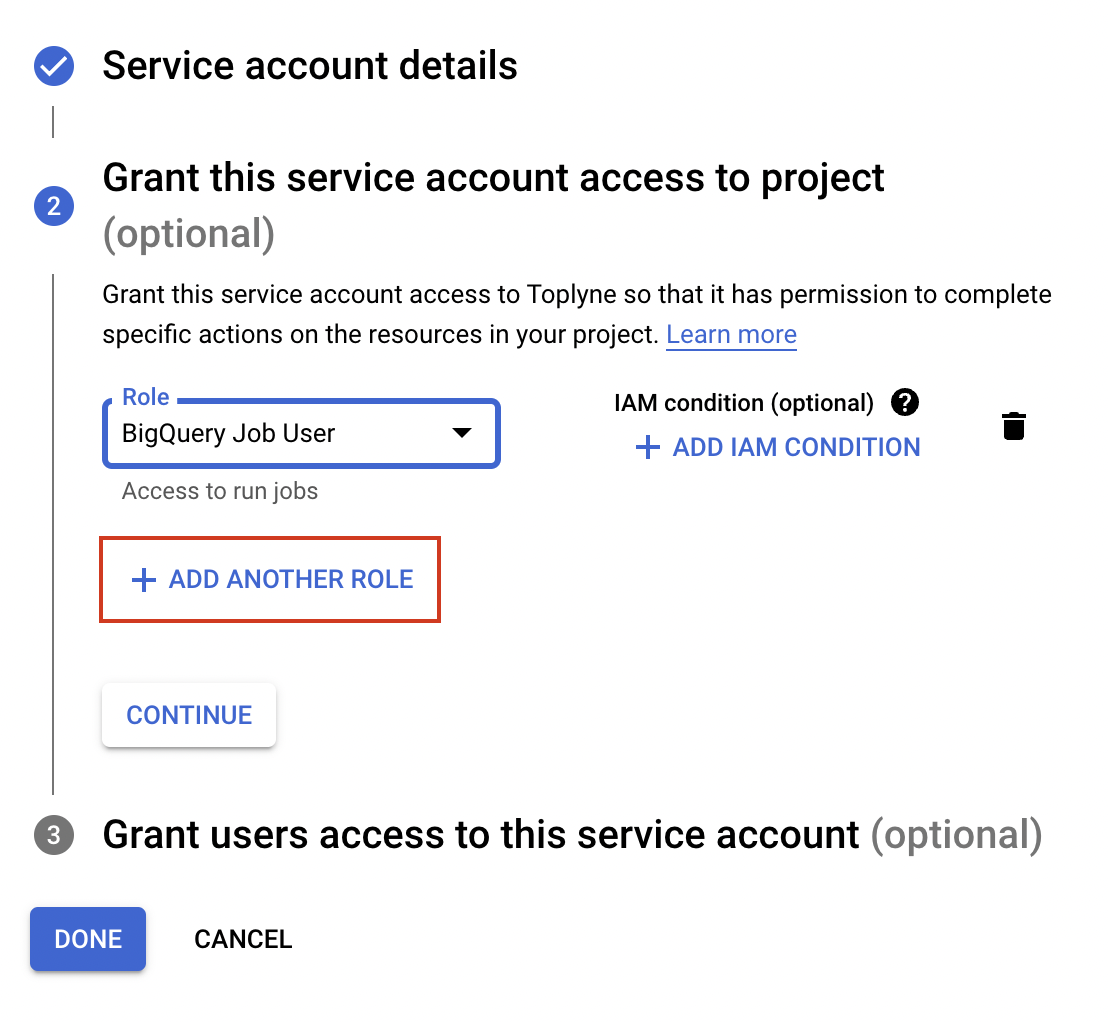

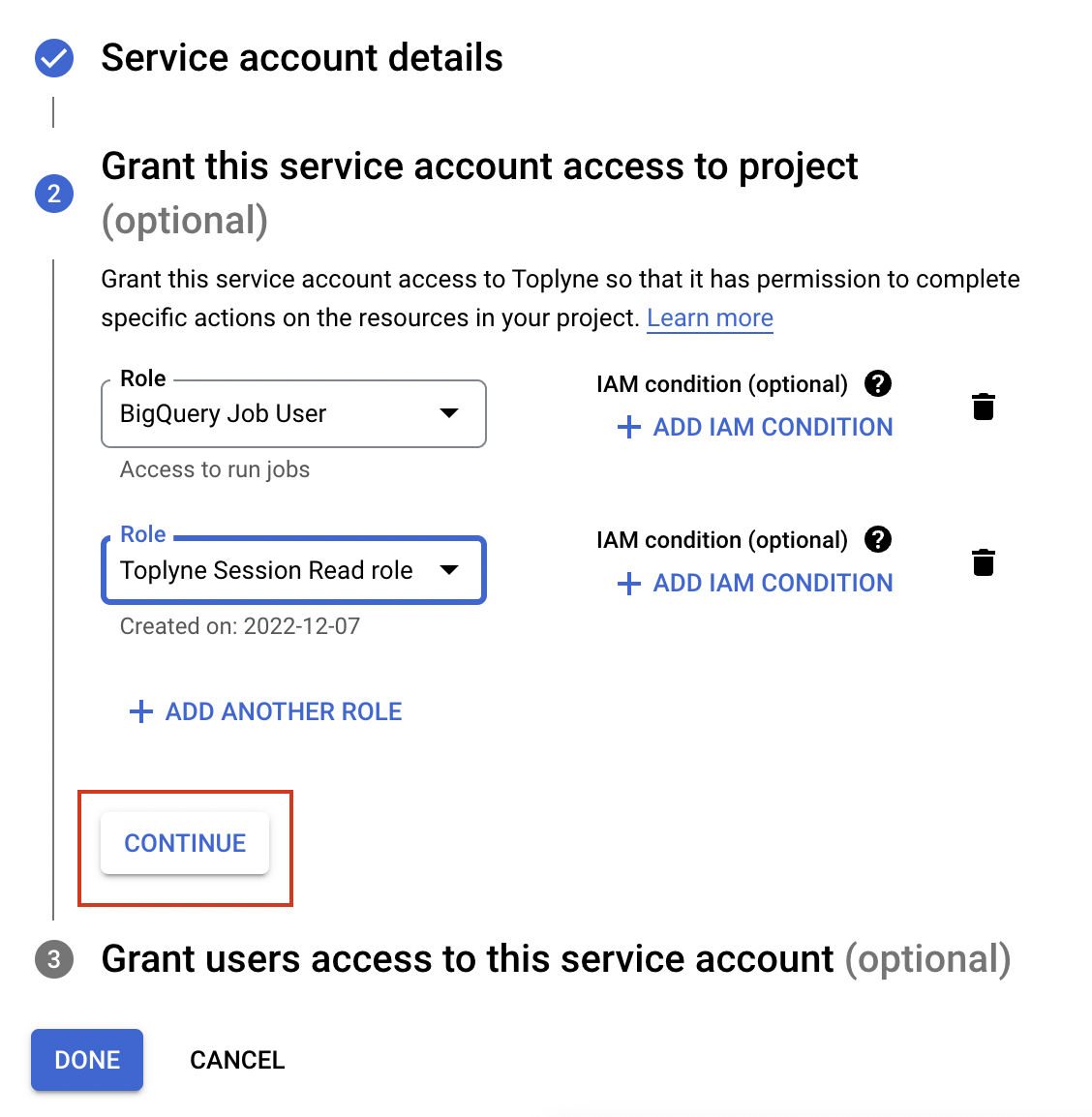

- In Role, add BigQuery Job User role and click on Add another rule

- In the role option, add Toplyne Session read role and click Continue followed by clicking Done

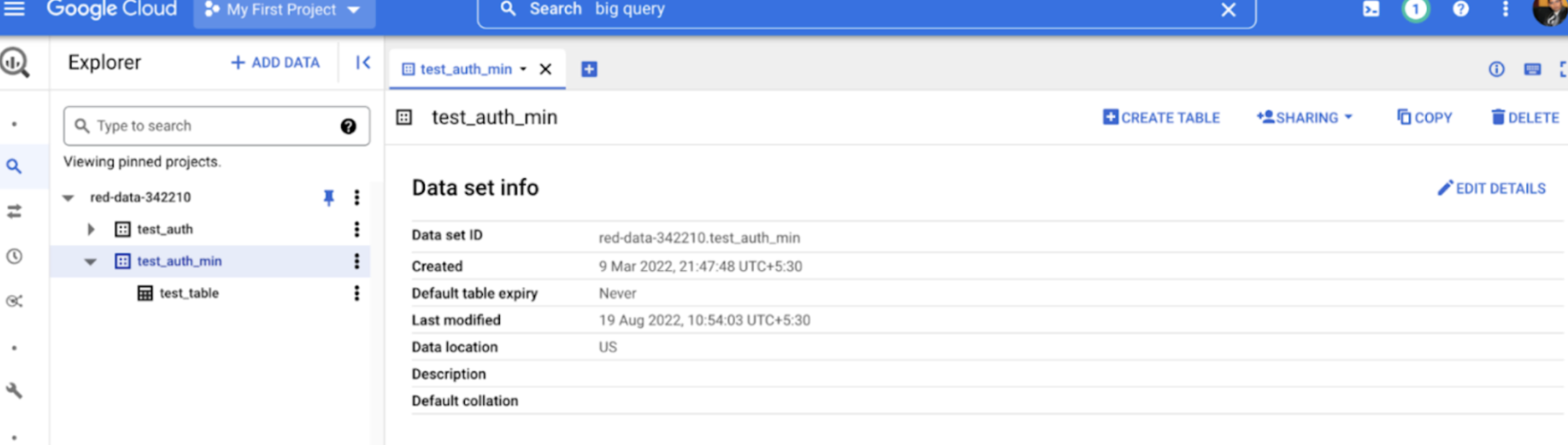

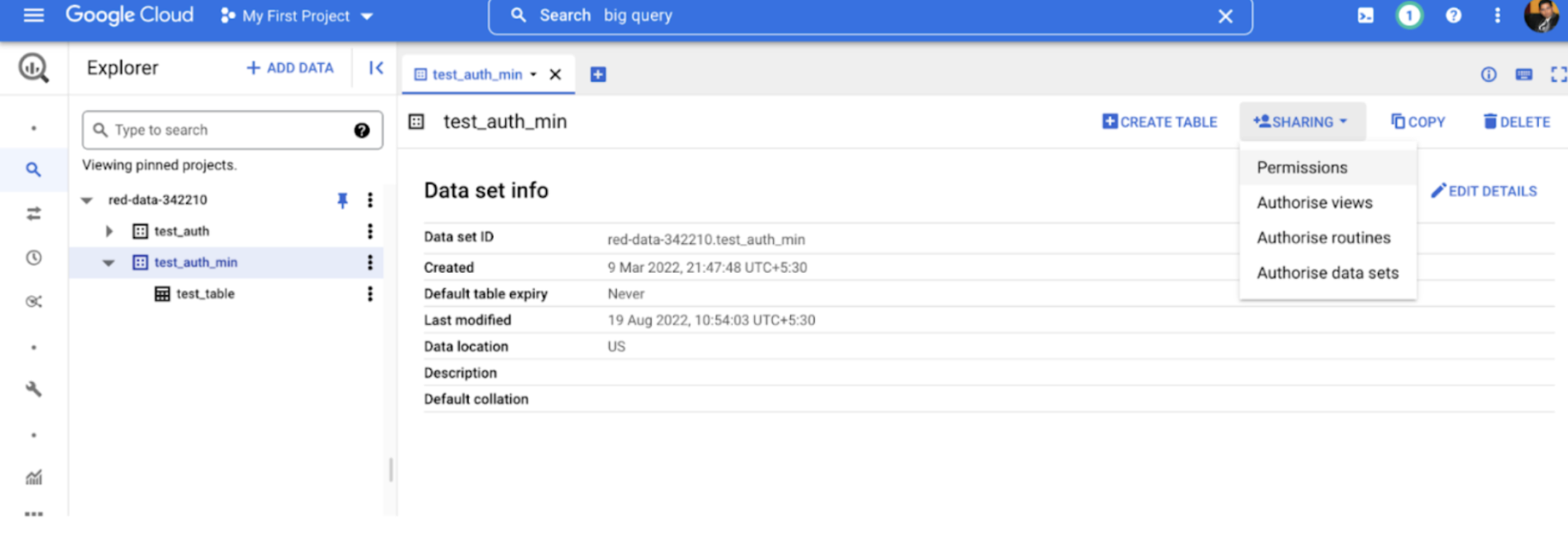

- Go to BigQuery and select your dataset.

- Navigate to Sharing -> click on Permissions

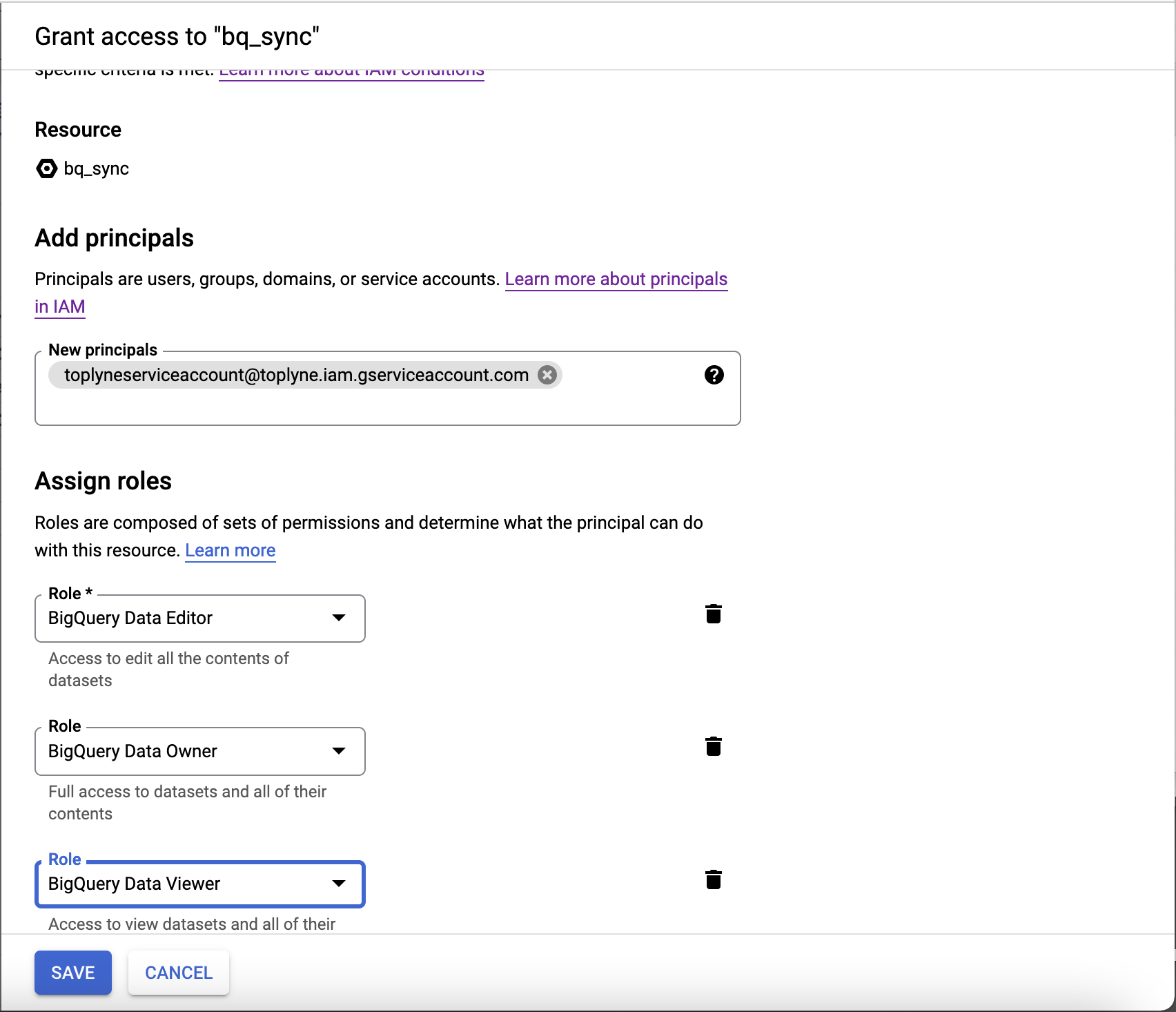

- Add Principal -> Enter service account email address you created in Step 10 -> Grant BigQuery Data Editor, Data Owner and Data Viewer Access -> Click Save

- Go back to IAM and select Service Accounts

- Select the service account that you have created in Step 10

- Go to Keys tab -> Add keys -> Create new key

- Download the key and share with us

Updated about 1 year ago